Index

1. Introduction

2. MySQL Database Cluster Details

3. Steps to setup MySql Cluster

4. Testing the Cluster

5. Cluster Replication

6. Steps to setup Cluster Replication

Introduction

This document aims to provide the steps to set up a mysql database cluster. The cluster is assumed to be consisting of three nodes, one of which is management node and remaining two are data and sql nodes.

The document then goes on to explain the steps required to set up replication between two such independent mysql database clusters.

MySql Database Cluster Details

MySql Cluster is basically one or more computers configured to work in a way such that data will be shared amongst all the involved nodes. The database engine to be used for all the database tables is NDB.

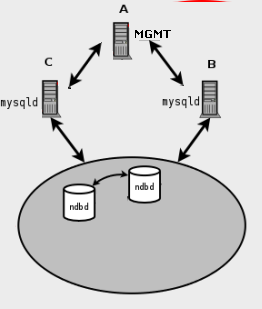

Following diagram represents a typical cluster.

Diag. 1 : Typical MySql Cluster

Steps to setup MySql Cluster

1. For the sake of simplicity, this document assumes that the cluster is set up using three database machines. The three servers are referred as Server A, Server B and Server C. also it is assumed that no mysql component exists on the server initially.

2. Open a putty session on all the three servers using ‘root’ access.

3. Also ensure that ‘/etc/hosts’ file contains entries for all the involved servers.

4. Before starting with the setup, first determine the roles to be played by each server in the cluster. For rest of document, it is assumed that Server A will act as Management Node of the cluster whereas Server B & C will act as Data and Sql nodes.

5. On server A, run the following commands to check if any mysql process is running.

Shell>ps –aef | grep mysql

Shell>ps –aef | grep ndb

If any process is running, it is better to shutdown (or kill) the process before moving ahead. Also, it should be noted that, if the process is running, then some mysql component is already installed on the server. It is better to uninstall the component and start over. For unistallation guide goto step

6. If no process is running, next check to be preformed is whether any mysql component is already installed on the server or not? Execute following command on the shell.

Shell>rpm –qa | grep –i mysql

This command will print all the mysql packages already installed on the server.

To remove this package (or all the installed packages), run the following command.

Shell>rpm –e <package_name>

It is always advised to take DB backup before removing mysql from the system.

7. Once all the installed mysql packages are removed, the server is ready to be installed with fresh new version of mysql cluster software. Download the latest mysql cluster package from mysql site. The list of packages for version mysql-5.1.51 ndb-7.1.9 is given below.

MySQL-Cluster-com-client-7.1.9a-1.rhel4.i386.rpm

MySQL-Cluster-com-clusterj-7.1.9a-1.rhel4.i386.rpm

MySQL-Cluster-com-debuginfo-7.1.9a-1.rhel4.i386.rpm

MySQL-Cluster-com-devel-7.1.9a-1.rhel4.i386.rpm

MySQL-Cluster-com-embedded-7.1.9a-1.rhel4.i386.rpm

MySQL-Cluster-com-extra-7.1.9a-1.rhel4.i386.rpm

MySQL-Cluster-com-management-7.1.9a-1.rhel4.i386.rpm

MySQL-Cluster-com-server-7.1.9a-1.rhel4.i386.rpm

MySQL-Cluster-com-shared-7.1.9a-1.rhel4.i386.rpm

MySQL-Cluster-com-shared-compat-7.1.9a-1.rhel4.i386.rpm

MySQL-Cluster-com-storage-7.1.9a-1.rhel4.i386.rpm

MySQL-Cluster-com-test-7.1.9a-1.rhel4.i386.rpm

MySQL-Cluster-com-tools-7.1.9a-1.rhel4.i386.rpm

8. Install all the above mysql packages except for ‘MySQL-Cluster-com-server-7.1.9a-1.rhel4.i386.rpm’ and ‘MySQL-Cluster-com-shared-compat-7.1.9a-1.rhel4.i386.rpm’. Run following command to install the package.

Shell>rpm –ivh <package_name>

You might need to clear all the dependencies to install the packages.

9. Once the packages are installed, go to ‘mysql’ directory. This should be available under ‘/var/lib’. In case it is not present here, search for this directory in the system. You can use following command to search the system.

Shell>find / -name “mysql”

10. On the same level as this directory, there should be another directory named ‘mysql-cluster’. Move inside ‘mysql-cluster’.

11. Create a text file named ‘config.ini’ in this directory. The contents of the file are given below. Meaning of each parameter is also explained in comments.

[NDBD DEFAULT]

NoOfReplicas=2 #Number of data and sql nodes in the cluster. In #our case this is 2.

[MYSQLD DEFAULT]

[NDB_MGMD DEFAULT]

[TCP DEFAULT]

# Managment Server

SendBufferMemory=8M

ReceiveBufferMemory=8M

[NDB_MGMD]

# the IP of THIS SERVER

HostName=<Server A’s IP>

[NDBD]

# the IP of the FIRST SERVER (Data Node)

HostName=<Server B’s IP>

DataDir=/var/lib/mysql-cluster

[NDBD]

# the IP of the FIRST SERVER (Data Node)

HostName=<Server C’s IP>

DataDir=/var/lib/mysql-cluster

[MYSQLD]

[MYSQLD]

12. Now move to Server B. Re-execute steps 4,5 and 6 on the server.

13. Once all the packages are available install all the packages except for ‘MySQL-Cluster-com-management-7.1.9a-1.rhel4.i386.rpm’ and ‘MySQL-Cluster-com-shared-compat-7.1.9a-1.rhel4.i386.rpm’. You can use same command given in step 7 to install the packages.

14. Once the packages are installed, go to /etc directory. Create/Edit ‘my.cnf’ file in this directory. The content of typical my.cnf is given below.

[mysqld]

ndbcluster # run NDB engine

ndb-connectstring=<Server A’s IP> # location of MGM node

datadir=mysql directory on Server B>

#socket=/lib/mysql/mysql.sock

user=mysql

# Default to using old password format for compatibility with mysql 3.x

# clients (those using the mysqlclient10 compatibility package).

old_passwords=1

default_character_set=utf8

collation_server=utf8_unicode_ci

character_set_server=utf8

skip_character_set_client_handshake

back_log = 75

max_connections = 500

key_buffer = 1G

myisam_sort_buffer_size = 64M

join_buffer_size = 1M

read_buffer_size = 1M

sort_buffer_size = 2M

table_cache = 1800

thread_cache_size = 384

wait_timeout = 7200

connect_timeout = 10

tmp_table_size = 64M

max_heap_table_size = 64M

max_allowed_packet = 64M

max_connect_errors = 1000

read_rnd_buffer_size = 524288

bulk_insert_buffer_size = 8M

query_cache_limit = 4M

query_cache_size =52428800

query_cache_type = 1

query_prealloc_size = 65536

query_alloc_block_size = 131072

default-storage-engine = MyISAM

log-slow-queries

long_query_time = 5

[mysqld_safe]

nice = -5

open_files_limit = 8192

[mysqldump]

quick

max_allowed_packet = 16M

[myisamchk]

key_buffer = 256M

sort_buffer = 64M

read_buffer = 64M

write_buffer = 64M

#options for ndbd process:

[MYSQL_CLUSTER]

ndb-connectstring=<Server A’s IP> # location of MGM node

15. Repeat the steps 11, 12 and 13 for Server C.

16. Once this step is done, the cluster configuration is done. The cluster of three servers A, B & C is now ready.

Testing the Cluster

1. Once the cluster is configured using steps given above, we will move to testing of the cluster.

2. On the Management Node (i.e. Server A), run the following command.

Shell>ndb_mgmd –f <path of config.ini file>

3. This is should start the management node of the cluster. Run the following set of commands to check the status of the cluster.

Shell>ndb_mgm

— NDB Cluster — Management Client —

ndb_mgm>show

The ‘show’ command will show the status of all the nodes in the cluster. Initially this would be ‘not connected’.

4. Now move to Server B and C respectively and execute following command on these servers.

Shell>ndbd –initial –connect-string=

5. This should start the node B and C and put them in the cluster. Now go back to Server A and re-run the show command. This will now show the ndbd nodes as connected and started.

6. Once the nodes are started and put in cluster, all that remains is starting mysql daemon on sql nodes so that they can start sharing and replicating the data. To achieve this, go to Server B and C and run following command.

Shell>mysqld –u root &

7. Once the mysql daemon starts gracefully, the cluster is fully functional and operating. The ‘show’ command on Server A will now display accordingly.

8. You can now test this cluster. Try creating any table on any of the database on one of the sql nodes (Server B or Server C). Please take care to use ‘NDB’ engine to create the table. This should get replicated to another node in no time. All the insertion, updates and deletions will also get replicated amongst the nodes.

Cluster Replication

The cluster replication refers to the configuration such that data is shared between two independent clusters. This configuration allows the data nodes of cluster 1 to communicate and send/receive db transactions from data nodes of cluster 2, so that the data on all the data nodes of both the clusters is always the same.

There are various ways of implementing this. The way, we have adopted for NGMP is depicted in the following diagram

Diag. 2 : Cluster Replication Setup

Here we have two clusters viz. Cluster 1 and Cluster 2 with 2 data and sql nodes each. The node A of cluster 1 acts as master to node Y of cluster 2.

Similarly, node Z of cluster 2 acts as master to node C of cluster 1.

This way, any data arriving on any of the node gets to all the remaining nodes in all the clusters.

Steps to setup Cluster Replication

1. For the sake of explanation, it is assumed that, we have already set up two clusters. Each cluster has three nodes, with one of them as management and remaining two as data and sql nodes. Cluster 1 has Server A as management node and Server B & C as data and sql node. Cluster 2 has Server X as management node and Server Y & Z as data and sql node.

2. Before setting up the replication, it is advised to determine which nodes will act as master to which nodes. The configuration we have explained here assumed that Node B is mater to Node Y and Node Z is master to Node C.

3. Shutdown (or kill) mysql daemon running on node B and node Y.

4. Open /etc/my.cnf on node B (Server B) for editing. Add following lines to the [mysqld] section of the file.

log-bin

binlog-format=row

server-id=10

5. Similarly, open the /etc/my.cnf on the node Y (Server Y). Add following lines to the [mysqld] section of the file.

server-id=20

master-host=<Server B’s IP>

master-port=3306

master-user=<username>

master-password=<password>

Please note username as password can be set to any appropriate value.

6. Start the mysql daemon on both the nodes using following command.

Shell>mysqld –u root &

7. Once the mysqld comes up gracefully on both the servers, go to node B and start the mysql client. On mysql prompt, execute the following command.

Mysql>GRANT REPLICATION SLAVE TO *.* ON ‘<username>’@’<Server Y’s IP>’ IDENTIFIED BY ‘<password>’

The username and password should be same as mentioned in the my.cnf file.

8. Now start the mysql client on node Y and execute following command.

Mysql>Slave start;

9. This should start the replication between the nodes B and Y with node B as master.

10. Execute similar steps on node Z and node C to set up replication between them with node Z as master.

11. Once this is done, the replication between the clusters is set up. We can check this by inserting any data in anyone node of the two clusters and checking if the db transaction gets replicated across the clusters on all nodes.

12. To further check the replication status, go to any master node (Node B or Node Z) and execute following query.

Mysql> SELECT * FROM mysql.ndb_binlog_index;

+———-+——————-+—————–+———+———+———+———–+—————-+————+——-+

| Position | File | epoch | inserts | updates | deletes | schemaops | orig_server_id | orig_epoch | gci |

+———-+——————-+—————–+———+———+———+———–+—————-+————+——-+

| 554 | ./win3-bin.000003 | 137073881251848 | 1 | 0 | 0 | 0 | 0 | 0 | 31915 |

+———-+——————-+—————–+———+———+———+———–+—————-+————+——-+

1 row in set (0.00 sec)

Please note the value of ‘epoch’ filed.

13. Now execute following query on the respective slave

Mysql> SELECT * FROM mysql.ndb_apply_status;

+———–+—————–+—————–+———–+———+

| server_id | epoch | log_name | start_pos | end_pos |

+———–+—————–+—————–+———–+———+

| 10 | 137073881251848 | win3-bin.000003 | 554 | 885 |

+———–+—————–+—————–+———–+———+

1 row in set (0.00 sec)

The ‘epoch’ value here should match that with the master. If so, replication is working fine.

14. If the epoch value does not match, please execute the set of queries on the slave

Mysql>SLAVE STOP;

Mysql>CHANGE MASTER TO MASTER_HOST='<MASTER’s IP>’, MASTER_PORT=3306, MASTER_LOG_FILE = ‘<VALUE OF File FIELD IN ndb_binlog_index TABLE ON MASTER>’, MASTER_LOG_POS=<VALUE OF Epoch FIELD IN ndb_binlog_index TABLE ON MASTER>;

Mysql>SLAVE START;

15. This should resolve the issue. This concludes configuration and testing of Mysql database cluster and cluster replication.

MySQL Cluster Setup and Replication between NDB and Non NDB … | mysql

Mar 04, 2011 @ 14:58:15

MySQL Cluster Setup and Replication between NDB and Non NDB … | unixsecure secondary

Mar 05, 2011 @ 22:45:54

Apr 27, 2011 @ 13:34:18

the document is very good i will try to setup cluster on my servers ok

plz reply to me. i need ur email id for further clarifications.

thanks&Regards

Ravi kiran.P

May 10, 2011 @ 17:37:25

Best instructions I have seen so far for “Creating Cluster on MySQL”.

Can you please elaborate that if I import non-clustered DB to the NDB, cluster DB, do I have to do any thing different? Please send me you emal to discuss further?

\Thanks a million to share this

Ali Jafri…

May 11, 2011 @ 08:25:12

Hi..this tutorial is so so so so amazing… it really helped us a lot in MySQL Clustering..Thank u so much… 🙂

May we know where we can find or read your other blogs? 😀

May 26, 2011 @ 15:50:58

Hi… can anyone please tell me one thing… im using ubuntu 11.04. I have setup the required nodes and they all connect with each other. I can see them in the management console. The problem i face here is when i do a SHOW ENGINES there’s no NDB or NDBCLUSTER engine in the list…

im using MySQL Cluster 7.1

MySQL cache and memcache | 阿喵就像家

Jun 28, 2011 @ 15:13:27

May 07, 2012 @ 13:17:08

When I enter ndbd –initial –connect-string= on the node, im getting the following error

#ndbd –initial –connect-string=

Unable to connect with connect string: nodeid=0,localhost:1186

Retrying every 5 seconds. Attempts left: 12 11

Could any one tell me why ?

Jan 18, 2013 @ 15:20:15

i have some confusion that it is necessary that server required for setup cluster

Jun 28, 2013 @ 09:47:50

hi i would like to enable replication, but my point is like i have cluster one management node and two data, 2 sql nodes. i would like to set up a replication like i want to replicate data in to single server from the cluster instead of setting one more cluster. is it possible.

my set up is like this that A IS MGM NODE and B,C are data and sql nodes and i want replicate all the events to Z with out a cluster.pl let me know the possibilities and issues..

thanks

Dec 08, 2013 @ 17:05:49

My testdb setup crashed due to low disk space on root dirve on my linux systems.

Does removing both the databases on Master & slave & also removing Bin log files from both the servers have any negative impact on replication If I create the databases with the same name in future.